
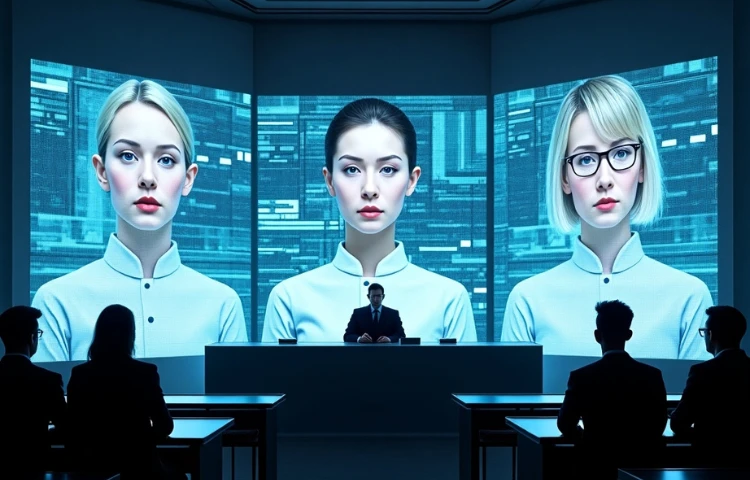

One artificial intelligence (AI) topic has recently drawn outsized attention across social media platforms: the ethics of AI-generated explicit content, especially deepfakes, and how regulators are responding to it. The attention stems from growing concern about nonconsensual imagery and the misuse of AI tools.

Deepfakes use AI to create hyper-realistic but fabricated images or videos, often placing real people in compromising scenarios without their consent. The spread of such content has sparked debate about privacy, consent, and the need for stricter regulation to curb misuse.

One state is considering a bipartisan bill that would penalize companies whose tools let users create explicit images of people without their consent. The bill responds to incidents in which people discovered AI-generated explicit images of themselves circulating online without their approval. [apnews.com; thetimes.co.uk]

The former First Lady has actively supported the “Take It Down Act,” a bipartisan federal bill designed to combat nonconsensual sexual images, including deepfakes. Her involvement underscores the severity of the issue and the need for comprehensive legal measures. [businessinsider.com; thetimes.co.uk]

Alexis Ohanian, co-founder of Reddit, has advocated using AI to moderate social media content. He envisions AI tools that let users adjust their own content preferences, which could help limit the spread of harmful material, including nonconsensual explicit content. [thetimes.co.uk; businessinsider.com]

Balancing AI progress against these ethical concerns calls for action on three fronts:

Robust Legislation: Implementing laws that deter the creation and distribution of nonconsensual explicit content is crucial. Such legislation should hold perpetrators accountable while protecting victims’ rights.

Technological Safeguards: Developing AI-driven detection systems can help identify and remove deepfake content swiftly, reducing potential harm.
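To make the "identify and remove" step concrete, here is a minimal Python sketch of how a platform might act on a detector's output. It assumes a hypothetical deepfake detector that assigns each item a score between 0 and 1; the `Item` type, the `triage` function, and both thresholds are illustrative assumptions, not any real product's API. The middle "human review" tier is also an assumed design choice, added because automated detectors can misclassify content.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
REMOVAL_THRESHOLD = 0.9  # at or above: treat as a likely deepfake and remove
REVIEW_THRESHOLD = 0.5   # at or above (but below removal): send to a human moderator


@dataclass
class Item:
    item_id: str
    deepfake_score: float  # hypothetical detector output in [0, 1]


def triage(items):
    """Sort items into remove / review / keep buckets by detector score."""
    decisions = {"remove": [], "review": [], "keep": []}
    for item in items:
        if item.deepfake_score >= REMOVAL_THRESHOLD:
            decisions["remove"].append(item.item_id)
        elif item.deepfake_score >= REVIEW_THRESHOLD:
            decisions["review"].append(item.item_id)
        else:
            decisions["keep"].append(item.item_id)
    return decisions


if __name__ == "__main__":
    sample = [Item("a", 0.97), Item("b", 0.62), Item("c", 0.10)]
    print(triage(sample))  # {'remove': ['a'], 'review': ['b'], 'keep': ['c']}
```

Routing borderline scores to people rather than deleting them outright trades some speed for fewer wrongful removals; where a platform sets those thresholds is a policy decision as much as a technical one.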

Public Awareness: Educating users about the existence and risks of AI-generated explicit content can empower them to recognize and report such material, fostering a safer online environment.

AI’s rapid advancement presents both incredible opportunities and serious ethical challenges. The rise of deepfake explicit content highlights the urgent need for stronger regulations, better detection tools, and widespread public awareness. Governments, tech companies, and advocacy groups must work together to ensure AI is used responsibly, protecting individuals from exploitation and preserving digital trust.
